The marriage of science, technology, and national security took a major step forward during and following World War II. The secret Manhattan Project, marshaling the energies and time of thousands of scientists and engineers, showed that it was possible for military needs to effectively mobilize and conduct coordinated research into fundamental and applied topics, leading to the development of the plutonium bomb and eventually the hydrogen bomb. (Richard Rhodes' memorable The Making of the Atomic Bomb provides a fascinating telling of that history.) But also noteworthy are the coordinated efforts made in advanced computing, cryptography, radar, operations research, and aviation. (Interesting books on several of these areas include Stephen Budiansky's Code Warriors: NSA's Codebreakers and the Secret Intelligence War Against the Soviet Union and Blackett's War: The Men Who Defeated the Nazi U-Boats and Brought Science to the Art of Warfare, and Dyson's Turing's Cathedral: The Origins of the Digital Universe.) Scientists served the war effort, and their work made a material difference in the outcome. More significantly, the US developed effective systems for organizing and directing the process of scientific research -- decision-making processes to determine which avenues should be pursued, bureaucracies for allocating funds for research and development, and motivational structures that kept the participants involved with a high level of commitment. Tom Hughes' very interesting Rescuing Prometheus: Four Monumental Projects that Changed Our World tells part of this story.

But what about the peace?

During the Cold War there was a new global antagonism, between the US and the USSR. The terms of this competition included both conventional weapons and nuclear weapons, and it was clear on all sides that the stakes were high. So what happened to the institutions of scientific and technical research and development from the 1950s forward?

Stuart Leslie addressed these questions in a valuable 1993 book, The Cold War and American Science: The Military-Industrial-Academic Complex at MIT and Stanford. He shows that defense funding sustained and expanded the volume of university-based research aimed at what were deemed important military priorities.

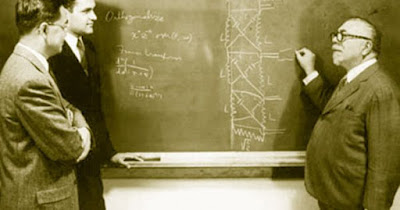

The armed forces supplemented existing university contracts with massive appropriations for applied and classified research, and established entire new laboratories under university management: MIT's Lincoln Laboratory (air defense); Berkeley's Lawrence Livermore Laboratory (nuclear weapons); and Stanford's Applied Electronics Laboratory (electronic communications and countermeasures). (8)

In many disciplines, the military set the paradigm for postwar American science. Just as the technologies of empire (specifically submarine telegraphy and steam power) once defined the relevant research programs for Victorian scientists and engineers, so the military-driven technologies of the Cold War defined the critical problems for the postwar generation of American scientists and engineers.... These new challenges defined what scientists and engineers studied, what they designed and built, where they went to work, and what they did when they got there. (9)

And Leslie offers an institutional prediction about knowledge production in this context:

Just as Veblen could have predicted, as American science became increasingly bound up in a web of military institutions, so did its character, scope, and methods take on new, and often disturbing, forms. (9)

The evidence for this prediction is offered in the specialized chapters that follow. Leslie traces in detail the development of major research laboratories at both universities, involving tens of millions of dollars in funding, thousands of graduate students and scientists, and very carefully focused on the development of sensitive technologies in radio, computing, materials, aviation, and weaponry.

No one denied that MIT had profited enormously in those first decades after the war from its military connections and from the unprecedented funding sources they provided. With those resources the Institute put together an impressive number of highly regarded engineering programs, successful both financially and intellectually. There was at the same time, however, a growing awareness, even among those who had benefited most, that the price of that success might be higher than anyone had imagined -- a pattern for engineering education set, organizationally and conceptually, by the requirements of the national security state. (43)

In the closing chapter of the book, Leslie gives some attention to the counter-pressures to the military's dominance in research universities that can arise within a democracy. The anti-Vietnam War movement raised opposition to military research on university campuses and eventually led to the end of classified research on many of them. He highlights the protests that occurred at MIT and Stanford during the 1960s, but equally radical protests against classified and military research happened in Madison, Urbana, and Berkeley.

These issues resonate strongly with Science, Technology and Society studies (STS). Leslie is indeed a historian of science and technology, but his approach does not completely share the social constructivism that characterizes STS today. His emphasis is on the implications of the funding sources for the direction that research in basic science and technology took in the 1950s and 1960s in leading universities like MIT and Stanford. And his basic caution is that the military and security priorities associated with this structure all but guaranteed that the course of research was distorted in directions that would not have been chosen in a more traditional university research environment.

The book raises a number of important questions about the organization of knowledge and the appropriate role of universities in scientific research. In one sense the Vietnam War is a red herring, because the opposition it generated in the United States was very specific to that particular war. But most people would probably understand and support the idea that universities played a crucial role in World War II by discovering and developing new military technologies, and that this was an enormously important and proper role for scientists in universities to play. Defeating fascism and dictatorship was an existential need for the whole country. So the idea that university research is sometimes used and directed towards the interests of national security is not inherently improper.

A different kind of worry arises on the topic of what kind of system is best for guiding research in science and technology towards improving the human condition. In grand terms, one might consider whether some large fraction of the billions of dollars spent in military research between 1950 and 1980 might have been better spent on finding ways of addressing human needs directly -- and therefore reducing the likely future causes of war. Is it possible that we would today be in a situation in which famine, disease, global warming, and ethnic and racial conflict were substantially eliminated if we had dedicated as much attention to these issues as we did to advanced nuclear weapons and stealth aircraft?

Leslie addresses STS directly in "Reestablishing a Conversation in STS: Who’s Talking? Who’s Listening? Who Cares?". Donald MacKenzie's Inventing Accuracy: A Historical Sociology of Nuclear Missile Guidance tells part of the same story with a greater emphasis on the social construction of knowledge throughout the process.

(I recall a demonstration at the University of Illinois against a super-computing lab in 1968 or 1969. The demonstrators were appeased when it was explained that the computer was being used for weather research. It was later widely rumored on the campus that the weather research in question was in fact directed towards considering whether the weather of Vietnam could be manipulated in a militarily useful way.)
