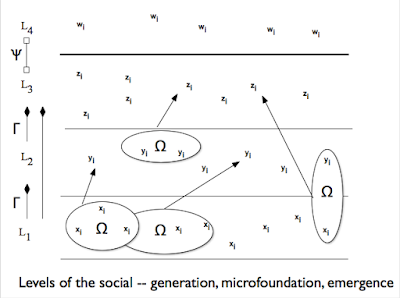

Quite a few recent posts have examined the power and flexibility of agent-based models (ABMs) as platforms for simulating a wide range of social phenomena. Joshua Epstein, one of the most prominent contributors to this field, is known for making a particularly strong claim on behalf of ABM methods: he argues that "generative" explanations are the uniquely best form of social explanation. A generative explanation is one that demonstrates how an upper-level structure or causal power comes about as a consequence of the operations of the units that make it up. Epstein's slogan puts the point aphoristically: "If you didn't grow it, you didn't explain it."

Here is how he puts the point in a Brookings working paper, “Remarks on the foundations of agent-based generative social science” (link; also chapter 1 of Generative Social Science: Studies in Agent-Based Computational Modeling):

"To the generativist, explaining macroscopic social regularities, such as norms, spatial patterns, contagion dynamics, or institutions requires that one answer the following question:

'How could the autonomous local interactions of heterogeneous boundedly rational agents generate the given regularity?' Accordingly, to explain macroscopic social patterns, we generate—or “grow”—them in agent models." (1)

And Epstein is quite explicit in saying that this formulation represents a necessary condition on all putative social explanations: "In summary, generative sufficiency is a necessary, but not sufficient condition for explanation." (5).

Consider, by contrast, the explanation of large technology failures. Here is a summary of the organizational approach to such failures (link):

"[M]ost safety experts agree that the social and organizational characteristics of the dangerous activity are the most common causes of bad safety performance. Poor supervision and inspection of maintenance operations leads to mechanical failures, potentially harming workers or the public. A workplace culture that discourages disclosure of unsafe conditions makes the likelihood of accidental harm much greater. A communications system that permits ambiguous or unclear messages to occur can lead to air crashes and wrong-site surgeries."

I would say that this organizational approach is a legitimate schema for social explanation of an important effect (the occurrence of large technology failures). Further, it is not a generativist explanation: it does not begin with a simplified model of a particular kind of failure and then demonstrate through iterated runs that failures occur X% of the time. Rather, it rests on a different kind of scientific reasoning: causal analysis grounded in the careful study and comparison of cases. Process tracing (starting with a failure and working backwards to find the key causal branches that led to it) and small-N comparison of cases allow the researcher to arrive at confident judgments about the causes of technology failure. And this kind of analysis can refute competing hypotheses: "operator error generally causes technology failure", "poor technology design generally causes technology failure", or even "technological over-confidence causes technology failure". All these hypotheses have defenders, so it is a substantive empirical claim that certain organizational deficiencies (in training, supervision, and communications processes) are the most common causes of technological accidents.

Other examples from sociology could be provided as well: Michael Mann's explanation of the causes of European fascism (Fascists), George Steinmetz's explanation of variations in the character of German colonial rule (The Devil's Handwriting: Precoloniality and the German Colonial State in Qingdao, Samoa, and Southwest Africa), or Kathleen Thelen's explanation of the persistence of, and change in, training regimes in capitalist economies (How Institutions Evolve: The Political Economy of Skills in Germany, Britain, the United States, and Japan). Each identifies causal factors that genuinely explain the phenomena in question, and none is generativist in Epstein's sense. These examples are drawn from historical and institutional sociology, but examples from other parts of the discipline are available as well.

I certainly believe that ABMs sometimes provide convincing and scientifically valuable explanations. The fundamentalism I am taking issue with here is the idea that all convincing and scientifically valuable social explanations must take this form. That is a much stronger view, and one that is not well supported by the practice of a range of social science research programs.

In other words, the over-reach of the ABM camp comes down to two claims: the exclusivity and the general adequacy of the simulation-based approach to explanation. ABM fundamentalists claim that only simulations from units to wholes will be satisfactory (exclusivity), and they claim that for any problem an ABM simulation can be designed that is adequate to ground an explanation (general adequacy). Neither proposition can be embraced as a general or universal claim. Instead, we need to recognize the plurality of legitimate forms of causal reasoning in the social sciences, and we need to recognize, along with their strengths, some of the common weaknesses of the ABM approach for some kinds of problems.